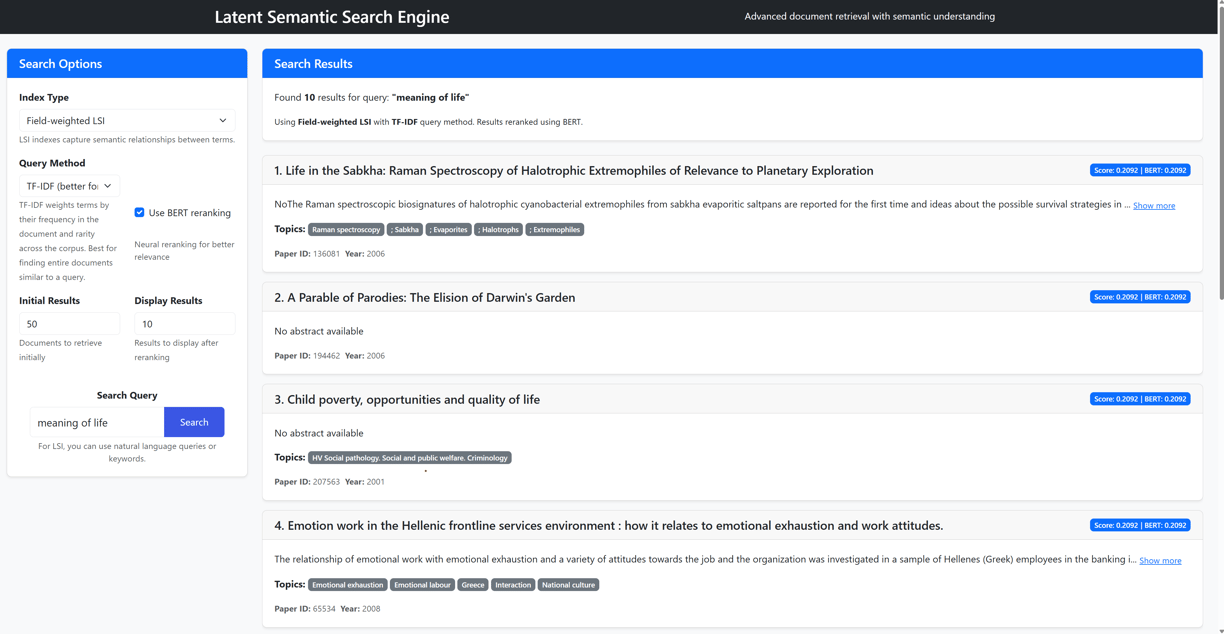

A sophisticated search engine that provides semantic search capabilities over academic papers, combining the power of Latent Semantic Indexing (LSI) with modern BERT-based neural language models to address synonymy and polysemy challenges in scholarly literature retrieval.

Latent Semantic Index SearchEngine

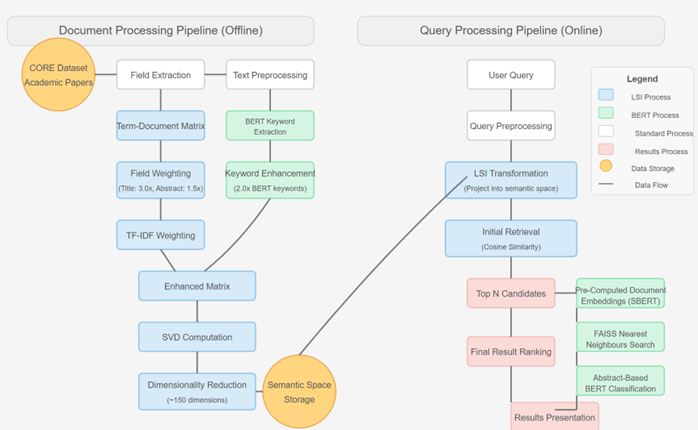

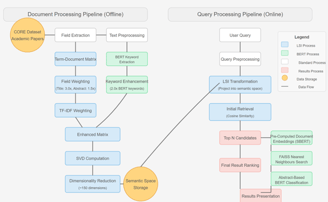

This search engine implements a hybrid two-stage architecture that combines field-weighted Latent Semantic Indexing (LSI) with BERT-based neural language models.

Stage 1 (document processing) uses field-weighted LSI enhanced with KeyBERT keyword extraction for efficient candidate selection, applying higher weights to keywords (3.0x), document titles (3.0x), and abstracts (1.5x).
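The field weighting can be illustrated with a minimal sketch of the term-counting step that would feed the LSI decomposition. It is not the project's actual code: the field names, the 1.0 weight for body text, and the whitespace tokenizer are assumptions made for illustration, and the SVD step that turns these weighted counts into latent topics is omitted.

```python
from collections import Counter

# Weights for keywords, titles, and abstracts are the ones stated above.
# The field names and the 1.0 weight for body text are assumptions.
FIELD_WEIGHTS = {"keywords": 3.0, "title": 3.0, "abstract": 1.5, "body": 1.0}


def weighted_term_counts(doc, weights=FIELD_WEIGHTS):
    """Return term -> weighted frequency for one document.

    `doc` maps a field name to its raw text. Fields without a
    configured weight are ignored.
    """
    counts = Counter()
    for field, text in doc.items():
        weight = weights.get(field)
        if weight is None:
            continue
        # Naive whitespace tokenization, used only to keep the sketch short.
        for term in text.lower().split():
            counts[term] += weight
    return counts


paper = {
    "title": "Neural ranking",
    "abstract": "Ranking papers with neural models",
    "body": "We study ranking",
}
vector = weighted_term_counts(paper)
```

In this toy document, a term that appears in the title contributes twice as much as the same term in the abstract, so title and keyword vocabulary dominates the term-document matrix that LSI would factorize.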

Stage 2 (query processing) provides optional BERT-based semantic re-ranking using Sentence-BERT embeddings with FAISS (Facebook AI Similarity Search) for approximate nearest neighbor search, followed by a fine-tuned BERT classifier that scores document relevance.
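The embedding-based re-ranking step can be sketched as follows. This is a simplified illustration under stated assumptions, not the project's implementation: exact brute-force cosine similarity stands in for the FAISS approximate nearest neighbor index, toy 3-dimensional vectors stand in for Sentence-BERT embeddings, and the final BERT classifier is left out. The function names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rerank(query_vec, candidates, top_k=10):
    """Sort Stage 1 candidates by embedding similarity to the query.

    `candidates` is a list of (doc_id, embedding) pairs. Exact search
    is used here in place of an approximate index.
    """
    scored = [(doc_id, cosine(query_vec, emb)) for doc_id, emb in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy vectors standing in for Sentence-BERT embeddings.
candidates = [
    ("doc_a", [0.0, 1.0, 0.0]),
    ("doc_b", [1.0, 0.0, 0.0]),
    ("doc_c", [1.0, 1.0, 0.0]),
]
ranking = rerank([1.0, 0.0, 0.0], candidates)
```

Because only the Stage 1 candidates are re-scored, the cost of this step depends on the candidate list size rather than on the size of the whole collection.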

Evaluation Results

Project Overview

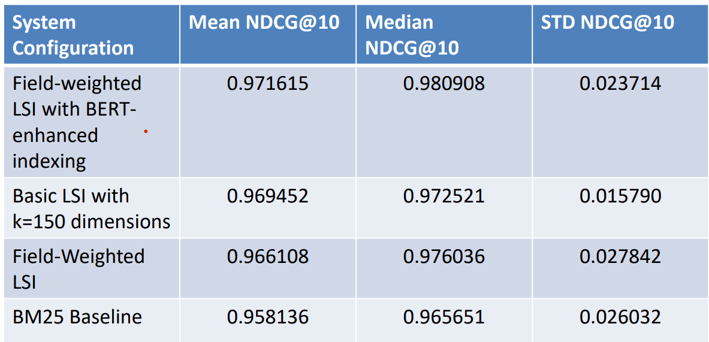

The following indexes were built for comparison:

BM25: Traditional probabilistic ranking baseline

Basic LSI: Standard Latent Semantic Indexing with uniform weighting

Field-Weighted LSI: Enhanced LSI with title/abstract/body field weighting

BERT-Enhanced LSI: Field-weighted LSI with KeyBERT keyword enhancement

Complete Hybrid System: Full system with BERT-based semantic re-ranking

Our complete hybrid system was evaluated on 30 academic queries using NDCG@10, with relevance judgments from JudgeBlender. The field-weighted LSI with BERT-enhanced indexing configuration achieved the highest mean NDCG@10 (0.9716), ahead of the traditional baselines, including BM25 (0.9581) and the basic LSI variants. The BERT re-ranking component, fine-tuned on 100,000 MS MARCO query-abstract pairs, reached accuracy, precision, and recall scores all exceeding 0.986.
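For readers unfamiliar with the metric, here is a minimal sketch of how NDCG@10 can be computed for one query and averaged over a query set. It assumes linear gain (the raw relevance grade) and an ideal ranking built from the same judged documents. The evaluation above may use a different gain function or judgment pool, so this will not necessarily reproduce the reported numbers.

```python
import math


def dcg_at_k(relevances, k=10):
    """Discounted cumulative gain over the first k graded relevance labels."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))


def ndcg_at_k(relevances, k=10):
    """DCG of the given ranking divided by the DCG of the ideal ordering."""
    ideal = dcg_at_k(sorted(relevances, reverse=True), k)
    return dcg_at_k(relevances, k) / ideal if ideal > 0 else 0.0


def mean_ndcg(per_query_relevances, k=10):
    """Average NDCG@k over a list of per-query relevance lists."""
    scores = [ndcg_at_k(rels, k) for rels in per_query_relevances]
    return sum(scores) / len(scores) if scores else 0.0
```

Each inner list holds the relevance grades of the returned documents in ranked order, for example `mean_ndcg([[3, 2, 3, 0, 1], [2, 0, 1]])` for two queries.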